Discovering Quality

This entry is Part 3 of a four-part series on quality. Here are Part 1, Part 2 and Part 4.

Whether the quality you are searching for is tangible, digital or mental, the arrival of the internet unlocked an unprecedented mechanism for discovering it. The internet provided centralised places from which to compare everything, while remaining entirely decentralised in and of itself.

By storing such copious amounts of data publicly, the internet didn't just allow for comparison, but also for discussion, recommendation and, most importantly, discovery. In fact, I would argue that discovering quality is one of the primary purposes of the internet.

As far as I can see, three primary means of discovering quality have arisen on the internet: aggregators, algorithms and serendipity. Interestingly, each yields more personalised results than the last.

Aggregators, including search engines like Google and online stores like Amazon, work by taking every piece of data on the internet and ranking it according to how popular the particular sites are. In theory, quality content should be found at the top of every search.

Algorithms, including content recommenders like Netflix and social media platforms like Instagram, work by serving content according to your interests, preferences and overall taste. In theory, quality content should be steered directly into your feed.

Compared to aggregators and algorithms, serendipity is far simpler and far more unpredictable. And yet serendipity is somehow far more satisfying, maybe because the discovery becomes something personal. Serendipity relies upon digital gardens, and involves exploring rabbit holes, aggressively sampling content and doubling down on existing sources of quality.

As ways of discovering quality, all three are highly flawed.

While each of these means of discovering quality has its own advantages and disadvantages, the main issue we are currently facing lies not with them, but with the internet itself. In essence, the issue comes down to this: quality has become increasingly difficult to find.

Why is this? Well, for one, as the years have gone by, the internet has become a lot more... crowded. More websites, more content creators, more copycats, more aggregators, more algorithms, more everything. The internet has become a bustling, bursting place, and many have begun to see the impact of this.

This is the original sin of modernity. We have cleared access, but at the cost of discovery. — Rohit Krishnan, Discovery is the original sin of the modern age

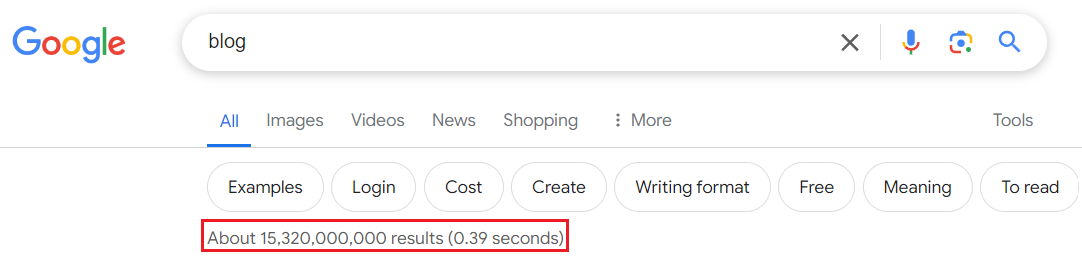

In terms of aggregators, Google and other search engines have become badly broken, partly due to results being marred by so-called sponsored recommendations, and partly due to the diminishing returns on more data over time. For example, if you search 'blog' on Google you will get more than 15 billion hits (in 0.39 seconds, thank you very much). To make matters worse, the first 17 of them aren't even actual blogs. How on earth are we supposed to find a needle amongst such a sheer volume of hay?

In terms of algorithms, the same problem of volume comes into play, with the additional and more fundamental problem being that content is focused more on entertainment than utility. In both cases, the problem is only going to get worse with the proliferation of AI-generated content.

Whether merely alongside or directly because of the proliferation of internet content, quality has seemingly retreated from our aggregators, our algorithms and even our serendipitous exploration. Apart from sheer volume, this phenomenon is best explained by the dark forest theory of the internet, which compares the internet to a dark forest at night. Although it may seem lifeless, in actuality it is teeming with life; it seems lifeless only because all life remains silent so as not to be detected by predators.

In response to the ads, the tracking, the trolling, the hype, and other predatory behaviors, we’re retreating to our dark forests of the internet, and away from the mainstream. — Yancey Strickler, The Dark Forest Theory of the Internet

Notice that the retreat is away from the mainstream.

Although mainstream quality may exist (it remains to be seen), for any content to appeal to the mainstream in the first place, it's going to have to be fairly basic, because simple = accessible.

To double down on this point, let me elaborate slightly by saying that the quality I've been referring to is in actuality niche quality. For the most part, nicheness seems inherent to quality. This is because depth and complexity of ideas thrive in niches, not wide open spaces. Specialisation, not generalisation.

So, if mainstream quality (i.e. low quality) is found on the mainstream internet, then niche quality (i.e. high quality) is going to be found on the niche internet. While broad, mainstream content is generously exhibited by aggregators and algorithms alike, niche internet content remains far more difficult to find.

While traditional methods are fairly ineffective at facilitating the discovery of quality, there are plenty of solutions, both already existing and steadily emerging. Each of these combines elements of aggregators, algorithms and serendipitous exploration, to varying degrees of success.

Link Aggregators

In terms of existing solutions, one possibility is link aggregators. By focusing on one particular niche, link aggregators combine the benefits of curation and vertical aggregation. The most prominent example is Hacker News, which focuses primarily on tech. While undeniably useful, link aggregators do lack a certain element of personalisation, especially when the niche focus ends up being frustratingly broad.

Taste Makers

To counter the under-personalisation of aggregators without becoming a slave to the algorithms, the most sensible approach seems to be relying on taste makers. This involves determining your own taste, and then, by trial and error (tasting content), finding individuals who share a similar taste. By 'share a similar taste', I mean both that their taste is similar to yours and that they share the content which they find or produce.

This approach is very similar to relying on serendipity; the difference is that you are not just randomly sampling content, but instead progressively collecting reliable sources of content. As a complement to this approach, subscribing to newsletters is a tried and true method of routing such content directly into your inbox (for example, the likes of Tim Ferriss's 5-Bullet Friday).

Start a Blog

Finally, a more abstract solution is to start a blog, as considered by the highly self-explanatory piece "A blog post is a very long and complex search query to find fascinating people and make them route interesting stuff to your inbox". Basically, if you write about some niche area which you are interested in, individuals who share a similar interest and taste will, to a greater or lesser degree, reciprocate. Unfortunately, not everyone has the inclination to write, and of those who do, not all enjoy the same degree of success.

Boutique Search Engines

In terms of forward-thinking solutions, one promising option is boutique search engines. Not quite aggregators and not quite algorithms, boutique search engines are curated, searchable interfaces. Existing examples include DoorDash, Airbnb and Behance.

Unlike vertical search aggregators, boutique search engines feel less like yellow pages, and more like texting your friends to ask for a recommendation. They have constrained supply, which is the foundation for their biggest moat — trust. Importantly, boutique search engines introduce new business models that don’t rely on advertising. — Sari Azout, Re-Organizing the World’s Information: Why we need more Boutique Search Engines

Although boutique engines make complete sense if you know what you're looking for, I'm unsure how effective they will be for open-ended and playful exploration.

Community Network Search Engines

The primary example of these is The Giga Brain, which works as a search engine for Reddit.

The internet has been mainstream for over 30 years but most human knowledge is still offline. There are still questions that Google and ChatGPT can't answer well. In way too many cases, Google searches return an endless list of crappy content farm blogs that feel totally untrustworthy. And ChatGPT, although great for coding questions or Wikipedia facts, isn't ready to answer questions that seek experience-based or subjective information.

For now, we turn to communities like Reddit to find the people that are really into any given niche who can give us specific, opinionated, experience-based answers. That said, Reddit's own search is really bad, and with over 300,000 communities, it's hard to know where to start when seeking info from redditors.

Though still in its early stages, software like The Giga Brain shows tremendous potential as a capturer of knowledge, not just information. It also does well to provide that knowledge in summarised packages as opposed to inaccessible information dumps.

AI-Powered Search Engines

AI-powered search engines work similarly to traditional search engine aggregators, but are able to filter out much of the noise, focusing not on advertising, SEO or page position, but on whether the content provided is the best possible fit. Currently, the most powerful of these is Exa.ai, and of all the current search alternatives on the market, Exa.ai is by far the best humanity has to offer in terms of usefulness and utility. Filters enable some level of customisability and personalisation, while a link-predicting transformer is used to match results to the meaning of a prompt.

While each of these approaches is an improvement on its predecessors, they still remain some way off from the perfect tool for discovering quality. It would have to be a curated, personalised, aggregated platform, with the potential for both playful exploration and effective utility. It would have to have a sleek, intuitive interface, where noise is minimised, if not eliminated entirely. It would have to deliver content without either the paradox of choice or content being shoved down your throat. Impossible... For now.
